gRPC Load Balancing | The Essential Guide to Scalability and Efficiency

In today’s world of distributed systems, handling large volumes of traffic without issue is crucial. This is especially true if you’re using gRPC, a high-performance Remote Procedure Call (RPC) framework developed by Google. With gRPC, proper load balancing is essential to keep things running smoothly, ensuring that the load is shared evenly and no single server gets bogged down. In this guide, we’ll break down the essentials of gRPC load balancing: its different types, the main strategies, and some helpful best practices to keep your applications efficient and resilient.

And if you’re interested in expanding your knowledge on related tech, check out these helpful resources: What’s New in Go 1.22, Go 1.23 in 23 Minutes, and Mastering Go with GoLand. These articles cover the latest Go updates that might just improve your gRPC-based applications!

Want to learn more? You’ll learn everything you need to know and more in our Production-Ready Services with gRPC and Go course.

Why is gRPC Load Balancing Important?

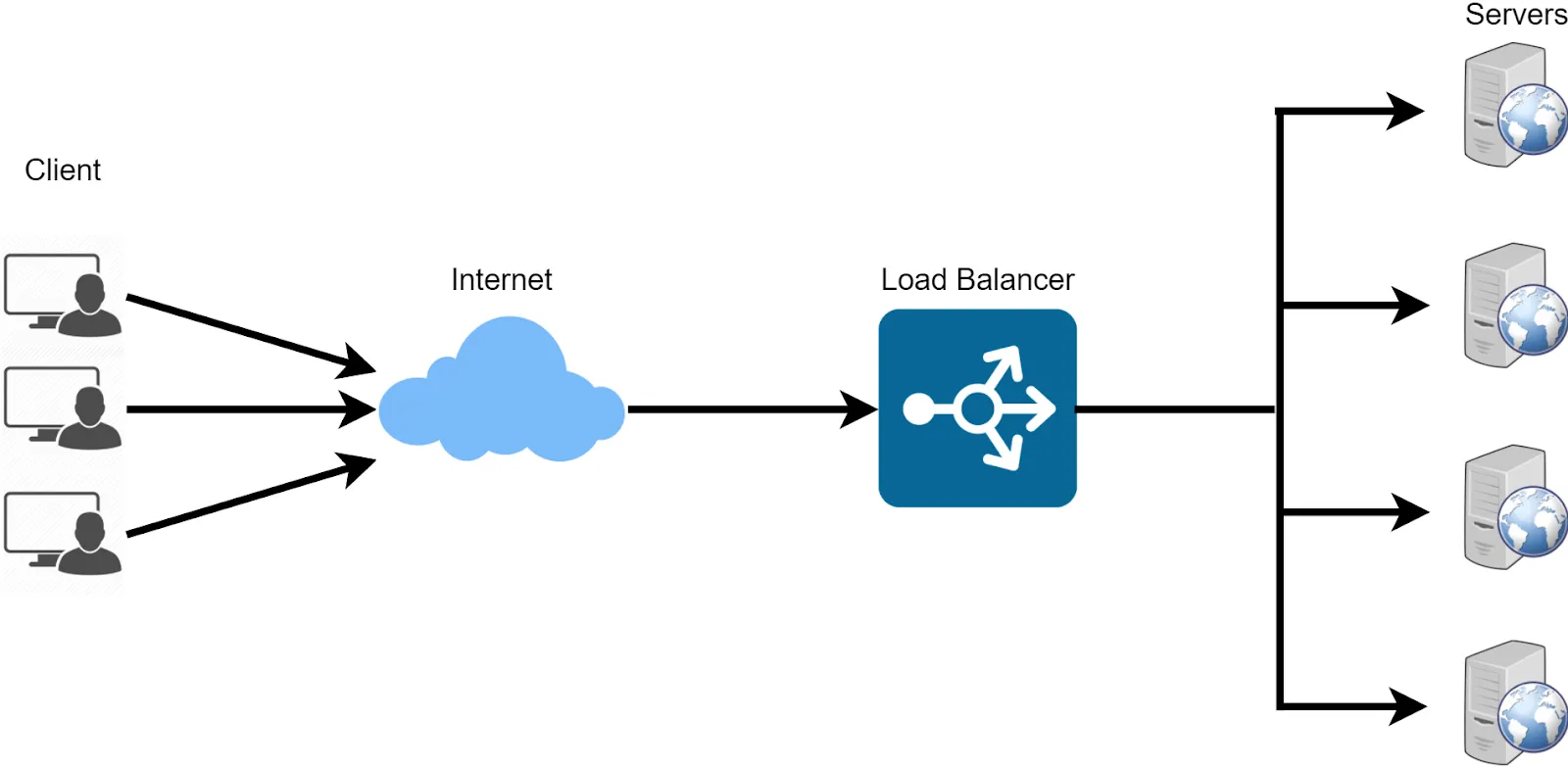

gRPC load balancing is all about distributing client requests across multiple servers. Imagine one server handling all requests—that server would be overloaded pretty quickly. With load balancing, though, client requests are spread out, ensuring every server does its fair share. This approach reduces response times, improves reliability, and helps applications stay up and running even during heavy traffic.

It looks something like this:

In a microservices world, where different services need to communicate seamlessly, gRPC is a popular choice due to its low-latency and high-throughput performance. But even with gRPC’s efficiency, having a solid load balancing strategy is crucial. Without it, traffic spikes or server downtime could result in bottlenecks, poor user experiences, and lost reliability. Load balancing optimizes how requests are routed, making sure your application’s performance is steady and scalable.

Types of Load Balancing in gRPC

To get the most out of gRPC, there are a few different ways you can approach load balancing, each with its own benefits depending on your setup and needs.

1. Client-side Load Balancing

With client-side load balancing, each gRPC client manages its own list of backend servers and picks which server to send requests to. The client makes this decision based on algorithms like round-robin or pick-first. This setup minimizes the need for external load balancers since the client itself is managing traffic distribution.
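In gRPC, the client-side policy is usually selected through the service config, which is a small JSON document. Here is a minimal sketch that builds that JSON using only the standard library. The helper name `serviceConfigFor` is made up for illustration, and how you hand the result to your client depends on your setup.

```go
package main

import "encoding/json"

// serviceConfigFor returns a gRPC service config JSON document that selects
// the given load balancing policy, for example "round_robin" or "pick_first".
// The helper name is hypothetical; only the JSON shape comes from gRPC.
func serviceConfigFor(policy string) (string, error) {
	cfg := map[string]any{
		"loadBalancingConfig": []map[string]any{
			{policy: struct{}{}}, // an empty object means "no policy-specific options"
		},
	}
	b, err := json.Marshal(cfg)
	if err != nil {
		return "", err
	}
	return string(b), nil
}
```

With grpc-go, a string like this is typically passed through `grpc.WithDefaultServiceConfig` when creating the client, together with a target such as `dns:///my-service:50051` (a placeholder name) so the resolver can return every backend address.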

2. Server-side Load Balancing

In server-side load balancing, you have a load balancer sitting between your clients and backend servers. This intermediary receives all client requests and distributes them to the right server based on load, health, or other conditions. Server-side balancing is typically used in larger setups where the backend is dynamic, and scaling up or down is frequent.

3. DNS-based Load Balancing

DNS-based load balancing is the simplest option, rotating through IP addresses of servers based on Domain Name System (DNS) records. It’s a basic approach that doesn’t have real-time visibility into server health or load, so it’s better suited for applications with lighter traffic demands.
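To see why DNS alone knows nothing about health or load, it helps to look at what a client actually gets back: a plain list of addresses. The sketch below assumes a hypothetical helper `resolveBackends` that takes the lookup function as a parameter, so the real `net.LookupHost` can be swapped for a fake.

```go
package main

import "net"

// lookupFunc has the same signature as net.LookupHost, so the real resolver
// can be passed in production and a fake one in tests.
type lookupFunc func(host string) ([]string, error)

// resolveBackends turns the IPs behind a DNS name into host:port addresses.
// Note what is missing: the result carries no health or load information.
func resolveBackends(host, port string, lookup lookupFunc) ([]string, error) {
	ips, err := lookup(host)
	if err != nil {
		return nil, err
	}
	addrs := make([]string, 0, len(ips))
	for _, ip := range ips {
		addrs = append(addrs, net.JoinHostPort(ip, port))
	}
	return addrs, nil
}
```

In real code you would call it as `resolveBackends("my-service.example.internal", "50051", net.LookupHost)`, where the host name is a placeholder.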

Key Load Balancing Strategies in gRPC

Now, let’s talk about a few specific load balancing strategies. Different strategies work well for different use cases, so here’s a breakdown to help you pick what’s best for your application.

Round-Robin Load Balancing

Round-robin is straightforward: requests are distributed evenly across all servers, one by one. This is great when you have servers with similar capacity and performance. It’s simple but might not account for server-specific loads, so it’s best in scenarios where your servers are uniform in capacity.
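As a rough illustration of the idea (not how any particular gRPC library implements it), a round-robin picker can be as small as an atomic counter over a fixed list. The type and method names here are made up for the example.

```go
package main

import "sync/atomic"

// roundRobin cycles through a fixed list of backend addresses.
type roundRobin struct {
	backends []string
	next     atomic.Uint64 // number of picks made so far
}

// pick returns the next backend in rotation. It is safe for concurrent use
// and returns "" when no backends are configured.
func (r *roundRobin) pick() string {
	if len(r.backends) == 0 {
		return ""
	}
	n := r.next.Add(1) - 1
	return r.backends[n%uint64(len(r.backends))]
}
```

With backends `a`, `b`, and `c`, four consecutive picks return `a`, `b`, `c`, and then `a` again.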

Pick-First Load Balancing

Pick-first, the default policy in gRPC, tries the resolved addresses in order, connects to the first one that is reachable, and sticks with it until that connection fails. This approach limits server switching, so connection management is simpler, but each client sends all of its traffic to a single server, which can result in an uneven load distribution across the backend.
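Here is a simplified sketch of that behavior. The `connect` hook stands in for a real connection attempt and is purely hypothetical, and the type is not safe for concurrent use.

```go
package main

import "errors"

// errNoBackend is returned when every connection attempt fails.
var errNoBackend = errors.New("no backend reachable")

// pickFirst uses the first reachable backend until it is reported as failed.
type pickFirst struct {
	backends []string
	connect  func(addr string) error // hypothetical connection attempt
	current  string                  // backend currently in use, "" if none
}

// pick returns the current backend, or walks the list in order to find one.
func (p *pickFirst) pick() (string, error) {
	if p.current != "" {
		return p.current, nil
	}
	for _, addr := range p.backends {
		if err := p.connect(addr); err == nil {
			p.current = addr
			return addr, nil
		}
	}
	return "", errNoBackend
}

// fail drops the current backend so the next pick starts again from the
// top of the list.
func (p *pickFirst) fail() { p.current = "" }
```

Notice that the picker never moves on while the current backend keeps working, which is exactly why one server can end up with most of the traffic.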

Weighted Load Balancing

Weighted load balancing is more nuanced, directing more requests to servers with higher capacity by assigning them weights. It’s especially useful when your servers have different processing capabilities or resources. Weighted balancing pairs well with health checks so you can avoid routing traffic to servers that aren’t performing well.
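There are several ways to honor weights. The sketch below uses smooth weighted round-robin, one common variant that spreads picks of the heavy server out instead of sending them in a burst. It is an illustration with made-up type names, not the algorithm of any specific load balancer, and it is not safe for concurrent use.

```go
package main

// weightedBackend is a server plus the bookkeeping the algorithm needs.
type weightedBackend struct {
	addr    string
	weight  int // configured weight; higher means more traffic
	current int // running score, updated on every pick
}

// weightedRR implements smooth weighted round-robin over its backends.
type weightedRR struct {
	backends []*weightedBackend
}

// pick raises every backend's score by its weight, selects the highest
// score, then lowers the winner's score by the total weight.
func (w *weightedRR) pick() string {
	if len(w.backends) == 0 {
		return ""
	}
	total := 0
	var best *weightedBackend
	for _, b := range w.backends {
		b.current += b.weight
		total += b.weight
		if best == nil || b.current > best.current {
			best = b
		}
	}
	best.current -= total
	return best.addr
}
```

With weights of 5, 1, and 1 for servers `a`, `b`, and `c`, seven picks come out as `a a b a c a a`: server `a` gets five of every seven requests, but the lighter servers are interleaved rather than starved.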

Least Connections

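With least connections, new requests go to the server with the fewest active connections. Because gRPC multiplexes many requests over a single HTTP/2 connection, gRPC-aware balancers often count in-flight requests instead, a variant usually called least-request. Either way, it’s a real-time balancing act that can be effective in high-traffic environments, helping prevent overloads on any single server.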
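A minimal sketch of the idea, counting in-flight requests per backend, might look like this. The names are hypothetical, and ties are broken by list order to keep the behavior predictable.

```go
package main

import "sync"

// leastConn tracks in-flight requests per backend.
type leastConn struct {
	mu       sync.Mutex
	backends []string       // fixed order, used to break ties
	active   map[string]int // in-flight requests per backend
}

func newLeastConn(backends []string) *leastConn {
	return &leastConn{backends: backends, active: make(map[string]int)}
}

// acquire picks the backend with the fewest in-flight requests and returns
// a release function that the caller must invoke exactly once when done.
func (l *leastConn) acquire() (string, func()) {
	l.mu.Lock()
	defer l.mu.Unlock()
	if len(l.backends) == 0 {
		return "", func() {}
	}
	best := l.backends[0]
	for _, b := range l.backends[1:] {
		if l.active[b] < l.active[best] {
			best = b
		}
	}
	l.active[best]++
	return best, func() {
		l.mu.Lock()
		defer l.mu.Unlock()
		l.active[best]--
	}
}
```

A caller would typically write `addr, release := lc.acquire()` followed by `defer release()` around the request.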

Best Practices for Implementing gRPC Load Balancing

Here are a few best practices to keep in mind when implementing gRPC load balancing. These tips should help you get the most out of your gRPC application’s performance and reliability.

1. Integrate Health Checks

Regular health checks are a must. They ensure that requests go only to servers that are up and running smoothly. You can set up custom probes or use options provided by load balancing tools like Envoy or NGINX. Health checks improve reliability by preventing traffic from hitting servers that aren’t fully functional.
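To make this concrete, here is a small sketch of the bookkeeping behind a health check: a backend is taken out of rotation after a number of consecutive failed probes and put back as soon as one succeeds. The threshold idea is borrowed from how proxies commonly behave, while the type and its methods are invented for this example. The probe itself, for instance a call to your service’s health endpoint, is left to the caller.

```go
package main

// healthTracker removes a backend from rotation after `threshold`
// consecutive failed probes. It is not safe for concurrent use.
type healthTracker struct {
	threshold int
	failures  map[string]int // consecutive failures per backend
}

func newHealthTracker(threshold int) *healthTracker {
	return &healthTracker{threshold: threshold, failures: make(map[string]int)}
}

// report records the result of one probe; a success resets the counter.
func (h *healthTracker) report(addr string, ok bool) {
	if ok {
		h.failures[addr] = 0
		return
	}
	h.failures[addr]++
}

// healthy returns the backends that are still below the failure threshold,
// preserving their original order.
func (h *healthTracker) healthy(backends []string) []string {
	var out []string
	for _, addr := range backends {
		if h.failures[addr] < h.threshold {
			out = append(out, addr)
		}
	}
	return out
}
```

The list returned by `healthy` is what you would feed into whichever picking strategy you use, so unhealthy servers never receive requests in the first place.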

2. Use Dynamic Configuration for Scaling

For applications with varying traffic patterns, consider using dynamic scaling tools like Kubernetes. Kubernetes allows you to add or remove server instances as needed. This flexibility helps keep costs down since you’re provisioning resources only when you need them.

3. Leverage gRPC-aware Load Balancers

Using a load balancer that’s gRPC-aware, like Envoy, Linkerd, or NGINX, provides added benefits since these tools understand gRPC’s HTTP/2 protocol. They offer traffic splitting, circuit breaking, and enhanced observability features, giving you better control over traffic distribution.

4. Monitor Performance Metrics

Keeping an eye on latency, error rates, server load, and connection counts is essential for effective load balancing. Tools like Prometheus and Grafana make it easy to track these metrics and alert you when thresholds are crossed. With monitoring, you can proactively adjust configurations before issues arise.

5. Consider Multi-layered Load Balancing

Combining client-side and server-side load balancing can give you a resilient, flexible setup. This approach is especially helpful in multi-cloud or hybrid environments, where clients might need to handle complex routing across various server locations.

FAQs About gRPC Load Balancing

1. What’s the best load balancing strategy for gRPC?

It depends on your setup. Round-robin is simple and a good starting point, but if your servers vary in capacity, weighted or least-connections might be more effective.

2. How does gRPC client-side load balancing differ from server-side?

With client-side balancing, each client decides which server to use, giving it more control. In server-side balancing, an external load balancer makes these decisions, which is great for centralized control in larger deployments.

3. Is DNS load balancing enough for gRPC applications?

DNS load balancing works, but it’s limited. It doesn’t consider server load or health, which can lead to imbalanced traffic. For high-demand applications, client-side or server-side balancing is usually more reliable.

4. Can gRPC load balancing work with Kubernetes?

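Yes, with one caveat. A standard Kubernetes Service balances at the connection level, and gRPC keeps long-lived HTTP/2 connections open, so all requests from one client can land on the same pod. To balance per request, you can pair a headless Service with client-side load balancing, put a gRPC-aware Ingress controller or proxy in front of your pods, or use a service mesh like Istio for more advanced traffic management.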

5. What tools support advanced gRPC load balancing?

Envoy and NGINX are popular choices. They understand gRPC’s HTTP/2 transport and offer features like circuit breaking and traffic splitting, making them great for managing high-demand environments.

Wrapping Up

Implementing gRPC load balancing is key to building scalable, resilient systems. Whether you go with client-side, server-side, or DNS-based load balancing, each approach has benefits depending on your setup. By exploring strategies like round-robin, weighted, and least-connections balancing, and applying best practices like health checks, dynamic scaling, and monitoring, you can ensure your gRPC applications are both efficient and reliable.

And if you’re using Go for your gRPC setup, don’t forget to stay current with updates: Go 1.23 in 23 Minutes and Mastering Go with GoLand are excellent to watch to get the most out of your Go-based applications. By staying updated and following best practices, you’re setting up your gRPC systems for long-term success!

Finally, if you truly want to master gRPC, you’ll learn everything you need to know and more in our Production-Ready Services with gRPC and Go course.