Everything You Need to Know about Logging in Go

In this blog post, we’ll dive into the world of logging and logging strategies for Go Engineers. We’re going to start by talking about logging generally before moving on to structured logs and how they differ from the unstructured logs you might be used to.

Logging is an essential part of the software development process, and usually the first debugging technique Go engineers learn; because of this its one of the first things I teach in my book.

Whether you’re a seasoned developer or just starting out, this blog post will equip you with the knowledge and skills necessary to use logging effectively, ensuring that you can tackle debugging challenges with confidence and efficiency.

Everything below is an excerpt from my new book, Foundations of Debugging for Golang. If you enjoy this post and learn something, the book is probably for you too.

Logging Locally with the fmt package

Go has several ways to log, the simplest being the fmt package. Most Go engineers when starting out will learn about this package pretty early and it’s a great place to start your logging journey. Let’s go through some of the most common functions you might want to use.

fmt.Print

This function prints an argument to the standard output. It’s straightforward to use:

fmt.Print("hello")

Which would simply output hello.

Even for complex data types like structs, fmt.Print handles the formatting in an easy to consume format. Consider the following code:

type user struct {

name string

age int

}

u := user{

name: "Matt",

age: 3,

}

fmt.Print(u)

Will print {matt 3}. Simple, but effective.

fmt.Println

This function is similar to fmt.Print but adds a new line character at the end, ensuring each print statement appears on a new line. This can make the output more readable, especially when printing multiple lines.

type user struct {

name string

age int

}

u := user{

name: "Matt",

age: 3,

}

fmt.Println(u)

fmt.Println(u)

Would print:

{Matt 3}

{Matt 3}

As you can see, each output it printed on a new line.

fmt.Printf

This function allows you to format the output using placeholders. It’s one of the most commonly used printing functions, offering flexibility and readability.

type user struct {

name string

age int

}

u := user{

name: "Matt",

age: 3,

}

fmt.Printf("The user’s name is %s", u.name)

Will print:

The user’s name is Matt.

There are different placeholders for different types. Here we used %s which is the placeholder for strings, but I also commonly use %v (for printing structs), and %d (for printing integers).

The fmt package will take you a surprisingly long way. Whilst you’re just starting out, you should embrace it. However, it is missing a couple of features that can make our logs a little more useful. Let’s take a look at another standard library package that can make our logs a little more awesome.

Upgrading Logs With the Log Package

While the fmt package is great for quick and simple logging, the log package offers more robust and configurable logging capabilities. It provides features like automatic timestamping, severity levels, and the ability to write logs to different output streams. We will not spend too much time talking about the log package, as newer versions of Go include another package (slog) that I think is even better than this one, especially for production. This is because slog has more powerful and expressive API that allows us to embed data into the logs in a cleaner way. However, for local logging, the log package is a decent middle ground.

The log package defines a Logger type with methods for formatting output, as well as helper functions for common logging tasks. It also includes additional functions like Fatal and Panic, which can be used to handle critical errors and exceptional situations.

Let’s look at our basic example from above again:

log.Print("hello")

Outputs:

2024/03/31 Hello

This automatic timestamp can prove incredibly useful, especially if our logs are going to be ingested into another system, to ensure order is preserved.

The API for the log package is very similar to that of fmt. All of the below will work:

type user struct {

name string

age int

}

u := user{

name: "Matt",

age: 3,

}

log.Print("Hello")

log.Println("Hello")

log.Printf("The user’s name is %s", u.name)

As mentioned, the package also has log.Fatal:

log.Fatal("oops")

log.Print("Hello")

The above code will print: oops but not hello. This is because log.Fatal logs a message and then calls os.Exit, terminating the program. This function should be used sparingly, and typically only in the main function, when the program encounters a critical error and cannot continue executing.

Similarly, the log.Panic function logs a message and then panics, which I recommend you almost never use. This is because panicking in code is the equivalent of an “uncontrolled explosion”. Your application will shut down and you do not get the ability to shut down any dependencies - for example closing a database connection.

One of the advantages of using the log package is its customizability. It allows you to control the output format, including timestamps, file paths, and line numbers. However, for simple local debugging, the default settings may be sufficient. The log package provides a straightforward way to get started with logging without the need for extensive configuration.

Whilst the log package is a great tool for local debugging, it may not be the best choice for production environments, for the reasons we outlined above. The slog package offers advanced features like structured logging, which can provide better organization and filtering capabilities for your logs. We’ll look at that in the next section.

So Which should I use for local logging?

If you’re working on a small project or simply need to quickly debug an issue locally, the fmt package is likely the better choice. Its simplicity and ease of use make it a great starting point for developers of all skill levels.

Once you are ready to move to production, I recommend slog.

Slog

In Go 1.21, slog was introduced to the standard library. Before this, structured logging had to either be built manually or by using a third party library (I typically used Zap from Uber).

The slog package is a structured leveled logger and enables us to turn logs that look like this using the log package:

2009/11/10 23:00:00 hello, world{matt 30}

Into the following using slog:

2009/11/10 23:00:00 INFO hello, world impacted_user="{user:matt age:30}"

As you can see we have gained the INFO keyword and the ability to add attributes that have printed in a JSON format. This is structured, leveled logging and is how I recommend you log for production.

Structured Logging

The problem with logs generally is they are unstructured. This means they do not adhere to a consistent, predefined format, making them hard to query and sort, which are two traits that are pretty critical if we intend for our logs to be ingested into another system so we can use them for debugging.

For log files to be human-readable, we commonly structure them in JSON format. Slog is our means to do that easily in Go. We saw a basic example of info logging with slog previously, but you may have noted that the output was still not in JSON format. To do that we need to do something like the following:

logger := slog.New(

slog.NewJSONHandler(

os.Stdout,

nil

),

)

logger.Info("hello, world",

"user", "Matt",

)

Which will produce:

{"time":"2023-08-04T16:58:02.939245411-04:00","level":"INFO","msg":"hello, world","user":"Matt"}

We chose to write to os.Stdout here, but you could also write directly to a file or another system too.

We saw previously that we could attach key/value pairs to logs such as a struct or a just a user’s name. This is useful, but would get annoying if you wanted to do it on every log. You can use LogAttrs to attach key value pairs to every log instead. This would look as follows:

slog.LogAttrs(

context.Background(),

slog.LevelInfo,

"hello, world",

slog.String("user", "Matt")

)

As you can see, LogAttrs takes a context. This means a handler can access this and pull out values such as the trace ID (we talk about tracing in more detail in the book).

Here’s a slightly more complex and real-world example. Consider a scenario where you are building a web application and want to include user-specific information in your logs for every request. Without slog.LogAttrs, you would need to manually add the user information to each log entry, which is repetitive and error-prone. With slog.LogAttrs, you can set these attributes once and have them automatically included in all subsequent logs.

func handleRequest(ctx context.Context, userID string) {

ctx = context.WithValue(ctx, "userID", userID)

slog.LogAttrs(

ctx,

slog.LevelInfo,

"Processing request",

slog.String("userID", userID)

)

slog.LogAttrs(

ctx,

slog.LevelInfo,

"Request processed successfully",

slog.String("userID", userID)

)

}

There is much more to slog, but beyond log levels, you now know near enough everything you need to know to start producing structured logs!

Log Levels

Log levels are a way to distinguish between different types of log messages based on their importance or severity. The slog package supports several log levels out of the box, including Info, Warning, Error, and Debug. By default, slog outputs all logs at the Info level and above.

You can adjust the log level dynamically using the slog.Level option. For example, to set the log level to Error and above, you can use the following code:

opts := &slog.HandlerOptions{

Level: slog.LevelError,

}

logger := slog.New(slog.NewJSONHandler(os.Stdout, opts))

err := errors.New("some-error")

logger.Info(

"Hello World",

slog.String("meta_info", "something else"),

slog.Int("account_id", 35464),

slog.Any("err", err),

)

logger.Error(

"Hello World",

slog.String("meta_info", "something else"),

slog.Int("account_id", 35464),

slog.Any("err", err),

)

This code would only output:

{"time":"2009-11-10T23:00:00Z","level":"ERROR","msg":"Hello World","meta_info":"something else","account_id":35464,"err":"some-error"}

The info log would be completely ignored because the log level has been set to error which is a higher severity than info. For completeness, here is all the log levels provided by the slog package, in order of priority:

Debug < Info < Warning < Error < Critical

Creating a Logging Strategy

I recommend keeping things simple and only having two log levels in your application, Info and Error.

By default, your log level should be set to Error, but you can enable Info by switching an environment variable. This stops your logging systems from becoming overwhelmed with noise generally, but does enable you to “turn it up” for periods of time if you need to, without having to make code changes and redeploy your application.

Generally, logging should happen at the “edge” of your application. This means I tend to only log in main(), http handlers or gRPC service implementations. Following this approach centralizes error handing, helps to reduce noise, and forces you to think about user impact. To do this successfully, you will need to use fmt.Errorf to “wrap” errors further down your stack to bubble them up to the layer in which we can log them. Here’s an example:

// readConfig simulates reading a configuration file.

func readConfig(path string) (*config, error) {

_, err := os.ReadFile(path)

if err != nil {

return nil, fmt.Errorf(

"failed_to_read_config_file: %w",

err

)

}

return &config{}, nil

}

// initApp initializes the application and returns an error if it fails.

func initApp() error {

_, err := readConfig("config.txt")

if err != nil {

return fmt.Errorf(

"initialization failed: %w",

err

)

}

return nil

}

func main() {

logger := slog.New(

slog.NewJSONHandler(

os.Stdout,

nil

)

)

err := initApp()

if err != nil {

logger.Fatal(

"App startup failed",

slog.Error("err", err)

)

}

logger.Info("App started successfully")

}

The above also showcases two different log levels happening. We have a call to Fatal, which will log then terminate our application. We would likely be always interested in seeing these in standard out, or pushing them to our logging tool. However, the info log might be something we enable if we are debugging, but not something we need to see always.

Deciding if a log should be info or error is not a perfect science. My advice is to use error log if something is actually an error; for example saving to the Database failed, a call to a Stripe failed, a file you tried to read didn’t exist.

If you are unsure, start with an info log and upgrade it.

What should I do with all these logs?

have mentioned a few times in this chapter about logging systems and using your logs to debug, but how do you actually do that if they are all just going to standard out?

The simplest thing you can do is output logs to a file. You then have the ability to review these during or after an event to piece together what is happening. This is very challenging and does not scale very well.

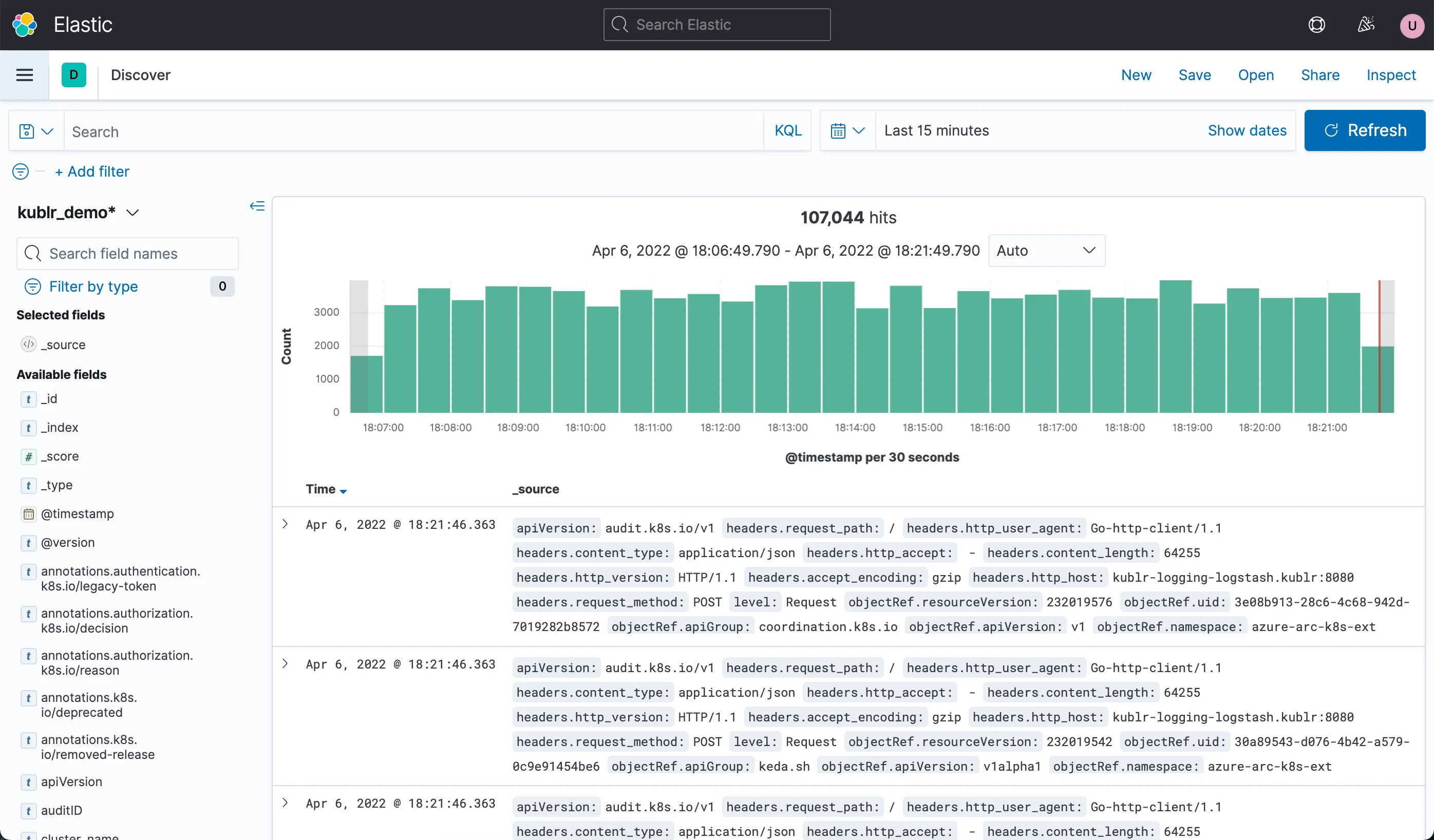

A common next step is to push your logs into something like ElasticSearch and view them with Kibana. In my book I include a guided exercise to configure this yourself, so check it out and give it a go!

Once your logs are in something like Kibana, you have the ability to run queries against them, build dashboards and even setup alerts if you see an increase in a certain amount of logs within a time period.

Having either Kibana or similar setup is, in my opinion, the minimum that should be done before running a system in production. If you are serving customers and don’t have this level of observability setup, I urge you to spend some time next week making it so. There are both great cloud and open-source options so there is no excuse!

Wrapping up

I hope you have a good understanding of how to approach logging in your next Go project. Before you share this post on Reddit or in your Slack and close the tab, I want to provide a couple of warnings for completeness sake. The below is the equivalent of the disclaimer that shows up before you download software that you’re probably not going to read, but I wouldn’t sleep at night if I didn’t share it!

-

Excessive logging can introduce significant overhead to your system’s performance. Each log operation consumes CPU cycles and I/O bandwidth.

-

High volumes of logging can increase response times, impacting user experience, especially in high-transaction systems (This is why toggling between log levels is a good idea in my opinion).

-

Logging too much data can lead to rapid consumption of storage space, leading to increased costs and management overhead.

-

Managing, archiving, and purging large volumes of logs can become a challenging and resource-intensive task.

-

Overlogging may inadvertently include sensitive information, posing a risk to data privacy and violating compliance standards. A larger volume of logs increases the risk of exposing critical information in the event of a security breach.

-

Too much log data can obscure important information, making it harder to identify critical issues amidst the noise.

-

More logging means more storage and processing power, leading to higher infrastructure costs.

-

The cost of managing and maintaining large log systems, including staff time and tools, can be high.

Phew! That’s a lot to take in. Remember, logging is a powerful tool that, when used effectively, can greatly enhance your ability to monitor and debug your applications. By considering these points, you can ensure that your logging strategy is both effective and efficient. Logging can often be one of the first places to gain performance improvements in your Go application. I teach you how to do that in my book.

One Final Thing…

Throughout this blog post, I have shared my views on how to manage all of the above, but I really recommend you read this x thread when some folks have a different view than me. I always advise reading widely and considering different opinions!